Black Mirror Predicted the Future of AI in Education? Dangers of AI and How Educators Can Prepare

The recent rise in popularity of ChatGPT and other Artificial Intelligence (AI) tools in education, such as Otter AI, Woebot, Tome AI, and ClassPoint AI, has generated significant debate among educators. More often than not, educators’ initial excitement about these revolutionary tools is quickly tempered by concerns about the risks and challenges of implementing AI in education, from its ethical implications and the potential for cheating to the greatest concern of all: the potential replacement of human teachers.

But that is not the whole story. There are darker truths and risks about AI that rarely come up in public discussion. Today, we will examine these troubling risks through episodes of the popular TV series Black Mirror. Once merely cautionary tales about the pitfalls of AI, these stories are now becoming reality. We will discuss whether these dangers should be valid concerns for educators. Of course, on the brighter side, we will also explore the opportunities these seemingly scary realities offer educators, and how they can prepare for a better future of education.

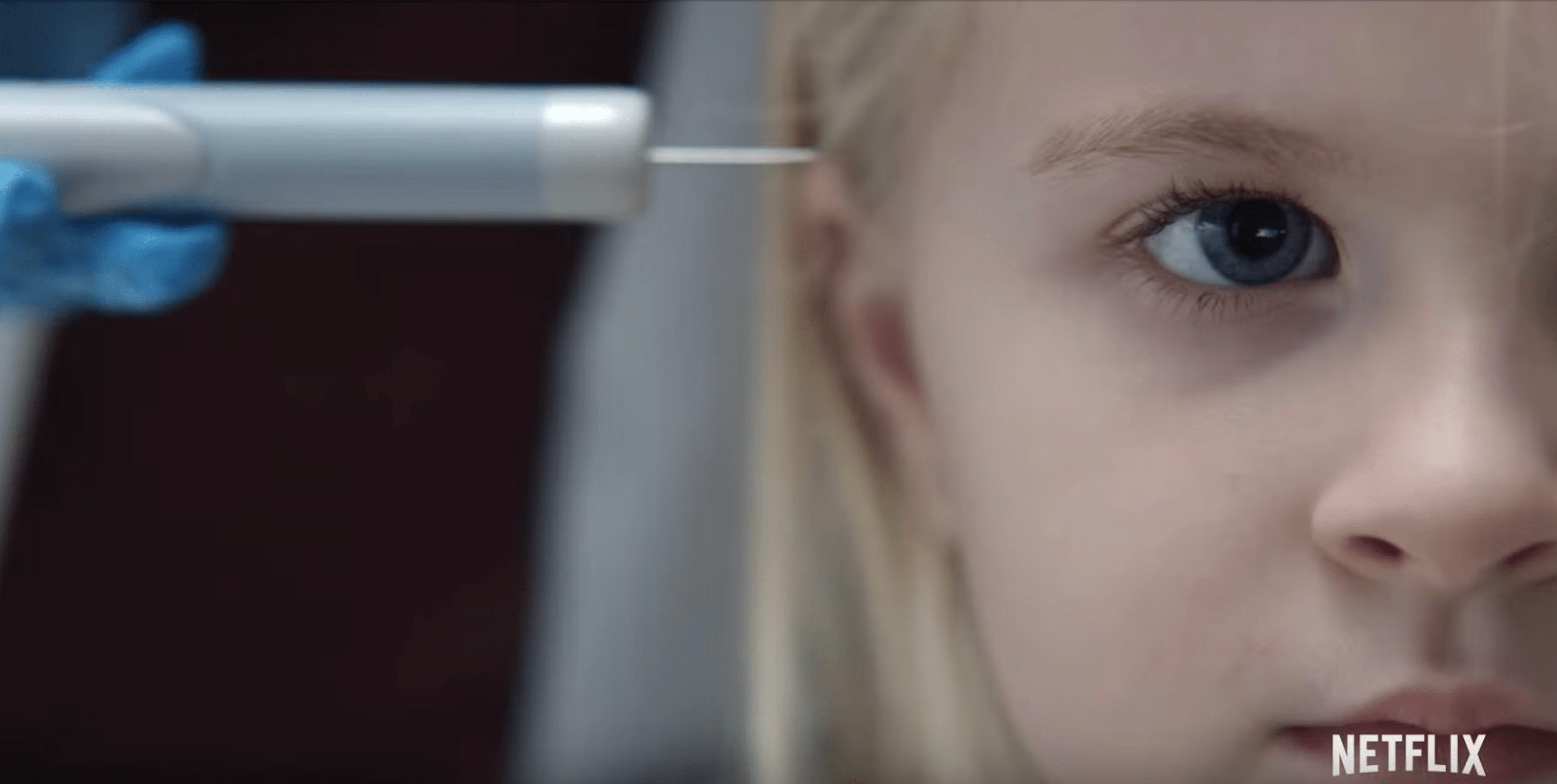

Black Mirror Season 4 Episode 2 Arkangel Predicted Excessive AI Surveillance

Summary: The episode “Arkangel” explores excessive AI surveillance by depicting a world where a mother named Marie has access to a highly intrusive monitoring system: a chip implanted in the brain of her daughter, Sara, that lets Marie monitor every detail of Sara’s life, including her location, real-time biological data, and even what she sees. The device also lets Marie censor any violent or disturbing scenes that could cause Sara emotional distress, which ultimately costs Sara her freedom and stifles her growth and development.

AI Surveillance in Education: AI surveillance tools in education are not new. Black Mirror’s “Arkangel” anticipated the real fears of educators who worry about the implications of AI technology for student privacy, intellectual freedom, and independence. For instance, a growing number of classrooms in China are equipped with everything from AI cameras and brain-wave trackers to smart pens that track students and make sure they actually complete their homework. Such surveillance systems risk creating a classroom environment of conformity and control that undermines the value of self-directed learning.

Opportunities for Educators: When used appropriately, with proper guidelines and safeguards, AI surveillance tools can provide real benefits for students. For instance, AI-powered learning analytics tools like Brightspace Analytics and Carnegie Learning can analyze students’ learning patterns, identify areas where students are struggling, and provide personalized recommendations for improvement. Student intervention tools like Blackboard Predict can also analyze student data, such as attendance and grades, to identify students who may be at risk of dropping out or in need of additional support, enabling educators to step in with targeted interventions.

Black Mirror Season 4 Episode 4 Hang the DJ Predicted Over-reliance on AI in Decision Making

Summary: The episode “Hang the DJ” features an advanced matchmaking system called “Coach” that measures the romantic compatibility of individuals and dictates the length and number of relationships each person goes through. The two protagonists, who fall deeply in love, rebel against the system that keeps separating them and pairing them with other partners. Ultimately, they discover that they are part of a simulation designed to test the compatibility of their real-life counterparts, and their rebellion in choosing each other signifies a desire for genuine agency and authentic connection.

AI Decision Making in Education: No AI matchmaking system as advanced as Coach exists today. However, there are AI tools that help educators make decisions in their teaching, from academic and career guidance tools like Naviance and BridgeU and college admissions platforms like ZeeMee to student intervention tools like Starfish.

Opportunities for Educators: “Hang the DJ” serves as a reminder to all educators that blind reliance on AI in decision-making is dangerous. While it is tempting and time-saving to let AI make all the decisions, trusting it blindly, especially on important matters such as students’ educational opportunities and career choices, is foolish.

As educators, we should also continuously assess and evaluate the effectiveness and impact of AI tools in the classroom. By critically evaluating AI systems, teachers can make better and more informed decisions about their usage and identify areas where human intervention may be more appropriate.

Not only teachers but also students are susceptible to letting AI make all the decisions in their learning journeys. A major concern for many educators today is the surge in plagiarism in schoolwork involving AI language models like ChatGPT. However, instead of banning these tools in schools, we should teach students to evaluate AI tools and AI-generated content critically, so that they remain in charge of their own learning decisions.

Black Mirror Season 2 Episode 1 Be Right Back Predicted Communication With the Deceased

Summary: One of the highest-rated episodes of Black Mirror, “Be Right Back” tells the story of Martha, a woman who tragically loses her partner, Ash, in a car accident and seeks solace in an AI that mimics his personality, memories, and behaviors, first as a chatbot and eventually as a realistic substitute designed to look exactly like him.

AI Chatbots to Communicate with the Deceased in Education: While far less realistic than the AI in Black Mirror, existing chatbots such as Replika can simulate a human conversation partner and could potentially allow users to simulate a conversation with a deceased person.

Opportunities for Educators: Setting aside the ethical implications of replicating individuals, this technology could support simulations that make learning more immersive and exciting for students. For now, educators can have students simulate conversations with influential historical figures or thinkers via AI chatbots like Hello History and ChatGPT, as shown here. Of course, these are not genuine conversations with the deceased, and the chatbots’ answers only approximate those figures’ views, but students can still benefit from these perspectives and use them to enrich their arguments and learning.

Black Mirror Season 5 Episode 3 Rachel, Jack and Ashley Too Predicted Loss of Human Connection

Summary: The Black Mirror episode “Rachel, Jack and Ashley Too” revolves around Rachel, a girl who idolizes pop star Ashley O, and Ashley Too, an AI assistant doll modeled on Ashley O’s personality. Rachel receives an Ashley Too doll as a birthday gift. However, her initial fondness for the doll quickly turns to unease when she discovers that Ashley O is in a coma while her manager exploits her consciousness to maintain her public persona.

AI for Student Support in Education: One of the main reasons educators resist AI is its limited ability to provide authentic emotional support and humanized learning experiences. Existing emotional support chatbots like Woebot and Ellie offer mental health resources and a non-judgmental space for students to express their thoughts and emotions. Intelligent tutoring systems like KAI and Carnegie Learning, meanwhile, analyze individual learning patterns and areas of weakness to deliver personalized instruction, targeted feedback, and learning materials.

However, these AI tools lack the emotions and human empathy that are essential for understanding the complexities of human feelings and for building the personal connection students need to feel motivated, understood, and engaged. Relying on them too heavily risks developing a generation of students who are emotionally detached and lack the skills to build meaningful relationships.

Opportunities for Educators: To prepare for the future of education, educators have to prioritize human connection, social interaction, and emotional support within the classroom to nurture connectedness, empathy, and understanding among students where AI falls short.

Black Mirror Season 3 Episode 6 Hated in the Nation Predicted AI Bias and Discrimination

Summary: The episode “Hated in the Nation” tells a story in which robotic bees with AI capabilities, called “ADIs” (Autonomous Drone Insects), are used for pollination after the extinction of real bees. However, the system is hacked, turning the ADIs into killing machines. The hacked system then displays bias, targeting individuals who have been subjected to online hate campaigns.

AI Bias and Discrimination in Education: “Hated in the Nation” illustrates how AI systems can perpetuate bias and discrimination against certain individuals or groups based on the data they are trained on. This is not uncommon in the real world. Even ChatGPT, which recently went viral, has shown this problem: users have reported instances where it generated sexist, racist, or otherwise offensive content.

In education, this raises concerns that AI teaching tools may exacerbate existing inequalities and biases in areas such as grading, recommendations, career guidance, and admissions. For instance, if the data used to train these systems contain biases, the result can be unfairness in many forms: grading based on factors unrelated to students’ actual performance, unequal opportunities for underrepresented groups in college admissions, and career guidance shaped by gender and racial stereotypes.

Opportunities for Educators: As educators, it is important to realize that the future of AI-driven education does not lie solely in the hands of companies and policymakers. We play a crucial role in shaping it by educating ourselves and our students about the potential biases and limitations of AI systems, and by fostering critical thinking skills that encourage students to question and evaluate AI outputs rather than accept them at face value. We can and should also take proactive steps to collaborate with parents, policymakers, and AI developers to ensure that future AI technologies in education are designed and implemented responsibly and for the benefit of future generations.

Black Mirror Season 6? And How Educators Can Prepare for the Future of AI

Will Black Mirror Season 6 offer a better prediction of the future of education? We will have to wait and see when the season is released.

The Black Mirror episodes so far have illustrated that the future of education is a complex interplay between humans and machines. AI has the potential to revolutionize education in unprecedented ways, but it is up to educators to harness its benefits while being mindful of its potential drawbacks.

By staying informed, critical, and adaptable, educators can help shape the future of AI in education and ensure that it serves the best interests of all students. Most importantly, educators should use AI as a supportive tool, not a replacement for teaching: AI can assist in tasks such as data analysis, personalized feedback, or content delivery, but it should not replace the teacher-student relationship.

All in all, teachers should feel excited rather than fearful about the future of AI in education. While AI can do many things that were impossible just decades ago, teachers remain the true catalysts for raising a civilized, ethical, critical, and humane generation, a role no AI can replace.
